Usability Feedback in Education Software Prototypes:

A Contrast of Users and Experts

Ph.D. Thesis in the Department of Counseling, Educational Psychology, and Special Education, Michigan State University

Pericles Varella Gomes (gomes@pilot.msu.edu)

Completed Summer 1996

Faculty Committee:

Dr. Patrick Dickson (pdickson@msu.edu)

Dr. Dick McLeod (rmcleod@msu.edu)

Dr. Leighton Price (pricel@pilot.msu.edu)

Dr. Carrie Heeter (heeter@msu.edu)

Abstract

Designers can't guess exactly how users will respond to their interfaces and content. Users are not able to fully recognize or articulate what they like and don't like about software. Usability testers (who, to allow more objectivity, are usually not the original designers) try to gather insightful feedback and present it to the original designers to help them optimize software designs.

This study compares a variety of usability feedback methods, gathering data from users and hypermedia designers who were asked to evaluate computer-based instruction prototypes. It provides information for defining cost-effective evaluation strategies and methods, and for specifying valid instruments and tools.

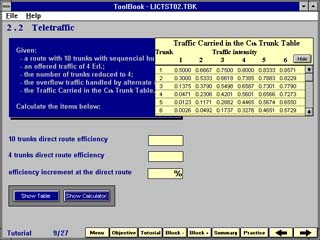

One of the screens of the prototype tested.

Data collected from users included quantitative usability instruments such as QUIS 5.5b (University of Maryland), qualitative reports using think-aloud evaluation techniques, and critical incident multimedia files. A critical incident multimedia file was produced for each subject, with screen and audio grabs of problems encountered; navigational maps were generated for each subject; written comments about the prototype were collected; and a descriptive list of errors was generated, comparing the types of errors encountered.

Testing occurred with 16 target users (engineering students from the U.S.A., China, Korea, India and Pakistan) and 5 educational hypermedia designers.

In addition to evaluating the software, the hypermedia designers also evaluated how useful each of the forms of collected user data would be to them.

User groups and designers were compared, and some more general trends were examined with all subjects combined. Gender comparisons were also studied. On the quantitative side, descriptive statistics, non-parametric techniques, and cluster techniques were applied to the answers.
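A non-parametric comparison of two groups' ratings can be illustrated with a small sketch. The abstract does not say which test was used; the Mann-Whitney U statistic shown here is one common choice and is an assumption, as are the function names. The ratings are invented for illustration and are not data from the study.

```python
def average_ranks(values):
    """Return 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(group_a, group_b):
    """Return (U_a, U_b) for two independent samples."""
    combined = list(group_a) + list(group_b)
    ranks = average_ranks(combined)
    n_a, n_b = len(group_a), len(group_b)
    rank_sum_a = sum(ranks[:n_a])
    u_a = rank_sum_a - n_a * (n_a + 1) / 2
    u_b = n_a * n_b - u_a
    return u_a, u_b


# Hypothetical ratings, invented for illustration only (NOT the study's data).
user_ratings = [7, 8, 6, 7, 9, 8]
designer_ratings = [5, 6, 4, 6, 5]

u_users, u_designers = mann_whitney_u(user_ratings, designer_ratings)
print("U (users):", u_users, "U (designers):", u_designers)
```

The smaller of the two U values is the one compared against a critical value (or converted to a p-value) for the given group sizes. A rank-based statistic of this kind suits ordinal rating scales and small samples, where the assumptions of a t-test are hard to justify.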

Designers reported that qualitative instruments in general were more useful. Designers were more critical of both the interface aspects and the pedagogical dimensions, and found significantly more errors. Designers were more efficient than users when executing the usability evaluation, but could not completely replace users (some errors were found only by users). Designers were better at the double task of critiquing a new interface while learning about the content at the same time. The variability of feedback within users and within designers was high.

In terms of rating the software, Indian users were more forgiving, and the American group was the most critical. American users were more efficient in finding errors. Females were systematically more positive about the prototype.

Methodological considerations for further work include the relative usefulness of combining quantitative and qualitative methods; the issue of when to use designers as opposed to target users; and the importance of gathering information from different ethnic user groups when developing software for an international audience.

One major conclusion regarding the ratings of the instruments by the experts was that the best instruments were the ones that produced contextualized data, both in the quantitative and qualitative aspects (such as the multimedia files, list of problems, and demographic data).

Examples of Multimedia Critical Incidents:

| User or Expert | Critical Incidents (sounds) | Critical Incidents (screen grabs) |
|---|---|---|
| User 7 (China, male) * | sound (481k) | Screen |
| User 9 (USA, female) * | sound (216k) | Screen |
| User 12 (USA, male) * | sound (234k) | Screen |
| User 14 (India, male) * | sound (338k) | Screen |
| Expert 3 (male) * | sound (195k) | Screen |
| Expert 5 (male) * | sound (585k) | Screen |

* Click on the audio file first, then click on the screen grab. Depending on your Internet connection, the audio might take a while to start playing.